Optimization Concepts:

Convex sets:

"A convex set is a set of points such that, given any two points A, B in that set, the line AB joining them lies entirely within that set. Intuitively, this means that the set is connected (so that you can pass between any two points without leaving the set) and has no dents in its perimeter.

Convexity/What is a convex set? - Wikibooks, open books for ...

https://en.wikibooks.org › wiki › Convexity › What_is_a_convex_set?"
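As a rough illustration of the definition, the sketch below samples pairs of points A, B from a set and checks whether points on the segment AB stay inside it. The membership tests (`in_disk`, `in_annulus`) and the helper `find_violation` are hypothetical names made up for this example. Random sampling can only find counterexamples: a hit proves the set is not convex, while finding nothing is evidence of convexity, not a proof.

```python
import random


def in_disk(p):
    """Closed unit disk: a convex set."""
    x, y = p
    return x * x + y * y <= 1.0


def in_annulus(p):
    """Ring 0.5 <= |p| <= 1: not convex, because of the hole in the middle."""
    x, y = p
    r2 = x * x + y * y
    return 0.25 <= r2 <= 1.0


def find_violation(member, samples=2000, seed=0):
    """Search for A, B in the set and t in [0, 1] with (1 - t)A + tB outside the set.

    Returns a violating (A, B, t) triple, or None if none was found.
    """
    rng = random.Random(seed)
    pts = []
    while len(pts) < 200:  # rejection-sample points of the set from [-1, 1]^2
        p = (rng.uniform(-1, 1), rng.uniform(-1, 1))
        if member(p):
            pts.append(p)
    for _ in range(samples):
        a, b = rng.choice(pts), rng.choice(pts)
        t = rng.random()
        q = ((1 - t) * a[0] + t * b[0], (1 - t) * a[1] + t * b[1])  # point on segment AB
        if not member(q):
            return a, b, t
    return None


disk_result = find_violation(in_disk)
annulus_result = find_violation(in_annulus)
```

For the disk no counterexample should turn up, while for the ring a segment between two roughly opposite points quickly crosses the hole.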

Convex functions:

"A convex function is a real-valued function defined on an interval with the property that its epigraph (the set of points on or above the graph of the function) is a convex set. Convex minimization is a subfield of optimization that studies the problem of minimizing convex functions over convex sets.

Convex set - Wikipedia

https://en.wikipedia.org › wiki › Convex_set"
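For a function on an interval, a convex epigraph is equivalent to the chord inequality f(θx + (1 − θ)y) ≤ θf(x) + (1 − θ)f(y) for all x, y in the interval and 0 ≤ θ ≤ 1. The sketch below (hypothetical helper `chord_violation`, standard library only) searches for violations of that inequality by random sampling. A violation disproves convexity; not finding one proves nothing.

```python
import math
import random


def chord_violation(f, lo, hi, trials=5000, seed=1, tol=1e-12):
    """Look for x, y, theta with f(theta*x + (1-theta)*y) > theta*f(x) + (1-theta)*f(y).

    The small tolerance keeps floating-point rounding from being reported
    as a violation. Returns (x, y, theta) or None.
    """
    rng = random.Random(seed)
    for _ in range(trials):
        x, y = rng.uniform(lo, hi), rng.uniform(lo, hi)
        theta = rng.random()
        lhs = f(theta * x + (1 - theta) * y)   # function value at the mixed point
        rhs = theta * f(x) + (1 - theta) * f(y)  # height of the chord there
        if lhs > rhs + tol:
            return x, y, theta
    return None


square_result = chord_violation(lambda x: x * x, -3.0, 3.0)
exp_result = chord_violation(math.exp, -2.0, 2.0)
sin_result = chord_violation(math.sin, 0.0, 2 * math.pi)
```

x² and exp are convex, so no violation is expected; sin is concave on [0, π], so chords between points there lie below the graph.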

Optimization problems:

Interesting simple optimization problems and solutions:

http://tutorial.math.lamar.edu/Classes/CalcI/Optimization.aspx

More Simple Optimization Problems and Solutions:

https://www.khanacademy.org/search?page_search_query=Optimization%20problems%20(calculus)

Basics of convex analysis:

https://en.wikipedia.org/wiki/Convex_analysis

A good overview: http://eceweb.ucsd.edu/~gert/ECE273/CvxOptTutPaper.pdf

least-squares:

"The least squares method is a statistical procedure to find the best fit for a set of data points by minimizing the sum of the offsets or residuals of points from the plotted curve. Least squares regression is used to predict the behavior of dependent variables.Sep 2, 2019

Least Squares Method Definition - Investopedia

https://www.investopedia.com › terms › least-squares-method"
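A minimal sketch of ordinary least squares for the simplest model, a straight line y ≈ slope·x + intercept. Setting the partial derivatives of the sum of squared residuals to zero (the normal equations) gives a closed-form solution, which the code evaluates directly. The function names and the sample data are illustrative assumptions, not taken from the cited sources.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y ≈ slope * x + intercept.

    Minimizes sum((y_i - (slope * x_i + intercept)) ** 2). Setting both partial
    derivatives to zero (the normal equations) yields the closed form below.
    """
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


def sum_squared_residuals(xs, ys, slope, intercept):
    """The quantity that least squares minimizes."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.1]  # made-up, roughly linear data
slope, intercept = fit_line(xs, ys)
best = sum_squared_residuals(xs, ys, slope, intercept)
```

For these five points the fit is slope 1.99 and intercept 1.04 (up to rounding), and nudging either parameter can only increase the sum of squared residuals. For many variables, libraries solve the same problem with matrix factorizations, for example `numpy.linalg.lstsq`.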

"minimizing the sum of the squares of the residuals made in the results of every single equation."

"The most important application is in data fitting. The best fit in the least-squares sense minimizes the sum of squared residuals (a residual being: the difference between an observed value, and the fitted value provided by a model)."

"Least-squares problems fall into two categories: linear or ordinary least squares and nonlinear least squares, depending on whether or not the residuals are linear in all unknowns. The linear least-squares problem occurs in statistical regression analysis; it has a closed-form solution. The nonlinear problem is usually solved by iterative refinement; at each iteration the system is approximated by a linear one, and thus the core calculation is similar in both cases.

Polynomial least squares describes the variance in a prediction of the dependent variable as a function of the independent variable and the deviations from the fitted curve.

When the observations come from an exponential family and mild conditions are satisfied, least-squares estimates and maximum-likelihood estimates are identical.[1] The method of least squares can also be derived as a method of moments estimator.

The following discussion is mostly presented in terms of linear functions but the use of least squares is valid and practical for more general families of functions. Also, by iteratively applying local quadratic approximation to the likelihood (through the Fisher information), the least-squares method may be used to fit a generalized linear model."

https://en.wikipedia.org/wiki/Least_squares
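The nonlinear case is described above as iterative refinement in which each iteration approximates the system by a linear one. One standard way to do this is the Gauss-Newton method; the sketch below applies a damped version (step halving) to a hypothetical exponential model y ≈ a·exp(b·x), using only the standard library. The model, starting point, and noise-free data are assumptions made for illustration.

```python
import math


def gauss_newton_exp(xs, ys, a, b, iters=50, tol=1e-12):
    """Fit y ≈ a * exp(b * x) by damped Gauss-Newton.

    Each iteration linearizes the residuals r_i = a * exp(b * x_i) - y_i at the
    current (a, b) and solves the 2x2 normal equations (J^T J) delta = -J^T r
    of that linear least-squares problem. The step is halved until the sum of
    squared residuals decreases.
    """
    def ssr(a, b):
        return sum((a * math.exp(b * x) - y) ** 2 for x, y in zip(xs, ys))

    for _ in range(iters):
        ja = [math.exp(b * x) for x in xs]        # d r_i / d a
        jb = [a * x * e for x, e in zip(xs, ja)]  # d r_i / d b
        r = [a * e - y for e, y in zip(ja, ys)]
        g11 = sum(u * u for u in ja)
        g12 = sum(u * v for u, v in zip(ja, jb))
        g22 = sum(v * v for v in jb)
        h1 = -sum(u * ri for u, ri in zip(ja, r))
        h2 = -sum(v * ri for v, ri in zip(jb, r))
        det = g11 * g22 - g12 * g12
        if det == 0:
            break  # Jacobian lost rank; a real solver would regularize here
        da = (h1 * g22 - g12 * h2) / det  # Cramer's rule for the 2x2 system
        db = (g11 * h2 - g12 * h1) / det
        step, current = 1.0, ssr(a, b)
        while step > 1e-8 and ssr(a + step * da, b + step * db) > current:
            step /= 2  # damping: shrink the step until the fit improves
        a, b = a + step * da, b + step * db
        if abs(step * da) + abs(step * db) < tol:
            break
    return a, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * math.exp(0.5 * x) for x in xs]  # noise-free data from a = 2, b = 0.5
a_hat, b_hat = gauss_newton_exp(xs, ys, a=1.0, b=0.3)
```

From this starting point the full first step overshoots, so the halving rule matters; later steps converge quickly because the residuals at the solution are zero.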

linear and quadratic programs:

"A linear programming (LP) problem is one in which the objective and all of the constraints are linear functions of the decision variables."

"A quadratic programming (QP) problem has an objective which is a quadratic function of the decision variables, and constraints which are all linear functions of the variables."

"LP problems are usually solved via the Simplex method. "

"An alternative to the Simplex method, called the Interior Point or Newton-Barrier method, was developed by Karmarkar in 1984. Also in the last decade, this method has been dramatically enhanced with advanced linear algebra methods so that it is often competitive with the Simplex method, especially on very large problems."

"Since a QP problem is a special case of a smooth nonlinear problem, it can be solved by a smooth nonlinear optimization method such as the GRG or SQP method. However, a faster and more reliable way to solve a QP problem is to use an extension of the Simplex method or an extension of the Interior Point or Barrier method."

https://www.solver.com/optimization-problem-types-linear-and-quadratic-programming
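To make the LP definition concrete, here is a small sketch for a two-variable problem. It is not the Simplex method quoted above: it uses brute-force vertex enumeration, relying on the fact that a linear objective over a non-empty, bounded polygon attains its optimum at a vertex. The Simplex method also moves between vertices, but visits them selectively instead of trying every one. The example problem (maximize 3x + 5y subject to x + y ≤ 4, x + 3y ≤ 6, x ≥ 0, y ≥ 0) and the function name are made up.

```python
from itertools import combinations


def solve_lp_2d(c, constraints, tol=1e-9):
    """Maximize c[0]*x + c[1]*y subject to a1*x + a2*y <= b for each (a1, a2, b).

    Tries every intersection of two constraint lines, keeps the feasible ones,
    and returns (value, x, y) for the best, or None if none is feasible.
    Assumes the feasible region is bounded.
    """
    best = None
    for (p1, p2, pb), (q1, q2, qb) in combinations(constraints, 2):
        det = p1 * q2 - p2 * q1
        if abs(det) < tol:
            continue  # parallel lines: no unique intersection point
        x = (pb * q2 - p2 * qb) / det  # Cramer's rule
        y = (p1 * qb - pb * q1) / det
        if all(a1 * x + a2 * y <= b + tol for a1, a2, b in constraints):
            value = c[0] * x + c[1] * y
            if best is None or value > best[0]:
                best = (value, x, y)
    return best


constraints = [
    (1.0, 1.0, 4.0),   # x + y <= 4
    (1.0, 3.0, 6.0),   # x + 3y <= 6
    (-1.0, 0.0, 0.0),  # x >= 0
    (0.0, -1.0, 0.0),  # y >= 0
]
result = solve_lp_2d((3.0, 5.0), constraints)
print(result)  # (14.0, 3.0, 1.0)
```

The optimum is the vertex (3, 1) with value 14. Enumeration grows combinatorially with the number of constraints, which is why practical solvers use Simplex or interior-point methods.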

Quadratic programming

https://optimization.mccormick.northwestern.edu/index.php/Quadratic_programming
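When a QP has only linear equality constraints, its optimality (KKT) conditions reduce to one linear system, so it can be solved directly. The sketch below assumes that setting; inequality constraints would need one of the Simplex-style or interior-point extensions mentioned in the quotes above. The objective (x₁ − 1)² + 2(x₂ − 2)² with constraint x₁ + x₂ = 1 is made up; up to a constant it equals ½xᵀPx + qᵀx with P = diag(2, 4) and q = (−2, −8). The helper names are hypothetical.

```python
def solve_linear(M, rhs):
    """Solve M z = rhs by Gaussian elimination with partial pivoting."""
    n = len(M)
    aug = [row[:] + [r] for row, r in zip(M, rhs)]  # augmented matrix copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda i: abs(aug[i][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular KKT matrix")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for i in range(col + 1, n):
            factor = aug[i][col] / aug[col][col]
            for j in range(col, n + 1):
                aug[i][j] -= factor * aug[col][j]
    z = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(aug[i][j] * z[j] for j in range(i + 1, n))
        z[i] = (aug[i][n] - s) / aug[i][i]
    return z


def solve_eq_qp(P, q, A, b):
    """Minimize 0.5 x^T P x + q^T x subject to A x = b.

    Assumes A has full row rank and P is positive definite on the null space
    of A. Solves the KKT system [[P, A^T], [A, 0]] [x; nu] = [-q; b].
    """
    n, m = len(P), len(A)
    kkt = [P[i] + [A[k][i] for k in range(m)] for i in range(n)]
    kkt += [A[k] + [0.0] * m for k in range(m)]
    rhs = [-qi for qi in q] + list(b)
    z = solve_linear(kkt, rhs)
    return z[:n], z[n:]  # primal solution x, Lagrange multipliers nu


x, nu = solve_eq_qp(P=[[2.0, 0.0], [0.0, 4.0]], q=[-2.0, -8.0], A=[[1.0, 1.0]], b=[1.0])
```

The solution is x = (−1/3, 4/3) with multiplier ν = 8/3.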

semidefinite programming:

"Semidefinite programming - Wikipedia

https://en.wikipedia.org › wiki › Semidefinite_programming

Semidefinite programming (SDP) is a subfield of convex optimization concerned with the optimization of a linear objective function (a user-specified function that the user wants to minimize or maximize) over the intersection of the cone of positive semidefinite matrices with an affine space, i.e., a spectrahedron.

Motivation and definition · Duality theory · Examples · Algorithms"

"Semidefinite Programming

https://web.stanford.edu › ~boyd › papers › sdp

In semidefinite programming we minimize a linear function subject to the constraint that an affine combination of symmetric matrices is positive semidefinite. Such a constraint is nonlinear and nonsmooth, but convex, so positive definite programs are convex optimization problems."

https://ocw.mit.edu/courses/electrical-engineering-and-computer-science/6-251j-introduction-to-mathematical-programming-fall-2009/readings/MIT6_251JF09_SDP.pdf
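A full SDP solver is beyond a short example, but the constraint in the quoted definitions can be illustrated on a toy problem with one variable: minimize x subject to F(x) = F₀ + xF₁ ⪰ 0, with F₀ = [[0, 1], [1, 0]] and F₁ = I (both chosen for illustration). Because F₁ is positive semidefinite, feasibility is preserved as x increases, so bisection finds the smallest feasible x. For a 2×2 symmetric matrix, positive semidefiniteness can be checked from the diagonal entries and the determinant. This sketch does not generalize; practical SDP solvers use interior-point methods, and the function names are hypothetical.

```python
def is_psd_2x2(m, tol=1e-12):
    """[[a, b], [b, c]] is positive semidefinite iff a >= 0, c >= 0 and a*c - b*b >= 0."""
    (a, b), (_, c) = m
    return a >= -tol and c >= -tol and a * c - b * b >= -tol


def affine_matrix(x, f0, f1):
    """F(x) = F0 + x * F1 for 2x2 symmetric matrices."""
    return [[f0[i][j] + x * f1[i][j] for j in range(2)] for i in range(2)]


def min_x_subject_to_psd(f0, f1, lo, hi, iters=100):
    """Minimize x subject to F0 + x*F1 being PSD, by bisection on [lo, hi].

    Assumes F1 is PSD (so feasibility is preserved as x grows), that hi is
    feasible, and that lo is infeasible.
    """
    if not is_psd_2x2(affine_matrix(hi, f0, f1)):
        raise ValueError("hi must be feasible")
    for _ in range(iters):
        mid = (lo + hi) / 2
        if is_psd_2x2(affine_matrix(mid, f0, f1)):
            hi = mid  # feasible: the optimum is at most mid
        else:
            lo = mid  # infeasible: the optimum is above mid
    return hi


F0 = [[0.0, 1.0], [1.0, 0.0]]
F1 = [[1.0, 0.0], [0.0, 1.0]]
# [[x, 1], [1, x]] has eigenvalues x - 1 and x + 1, so it is PSD iff x >= 1
x_opt = min_x_subject_to_psd(F0, F1, lo=-10.0, hi=10.0)
```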

minimax:

"Minimax - Wikipedia

https://en.wikipedia.org › wiki › Minimax

Minimax (sometimes MinMax, MM or saddle point) is a decision rule used in artificial intelligence, decision theory, game theory, statistics and philosophy for minimizing the possible loss for a worst case (maximum loss) scenario. When dealing with gains, it is referred to as "maximin"—to maximize the minimum gain.""
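In game playing, the minimax rule is usually applied by searching a game tree: the maximizing player assumes the opponent will always choose the reply that is worst for the maximizer. The recursive sketch below uses a hypothetical tree representation (nested lists, with numbers as leaf payoffs to the maximizer); the tree values are illustrative.

```python
def minimax(node, maximizing):
    """Minimax value of a game tree.

    A leaf is a number (the payoff to the maximizing player); an inner node
    is a list of child subtrees. Players alternate turns by depth.
    """
    if not isinstance(node, list):
        return node
    values = [minimax(child, not maximizing) for child in node]
    return max(values) if maximizing else min(values)


# Depth-2 tree: the maximizer picks a branch, then the minimizer picks a leaf.
tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]
value = minimax(tree, maximizing=True)
best_branch = max(range(len(tree)), key=lambda i: minimax(tree[i], maximizing=False))
print(value, best_branch)  # 3 0
```

The minimizer can hold the three branches to 3, 2, and 2, so the maximizer's best guaranteed payoff is 3, via the first branch.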

duality theory:

"In mathematical optimization theory, duality or the duality principle is the principle that optimization problems may be viewed from either of two perspectives, the primal problem or the dual problem. The solution to the dual problem provides a lower bound to the solution of the primal (minimization) problem.

Duality (optimization) - Wikipedia

https://en.wikipedia.org › wiki › Duality_(optimization)"

"Usually the term "dual problem" refers to the Lagrangian dual problem but other dual problems are used – for example, the Wolfe dual problem and the Fenchel dual problem. The Lagrangian dual problem is obtained by forming the Lagrangian of a minimization problem by using nonnegative Lagrange multipliers to add the constraints to the objective function, and then solving for the primal variable values that minimize the original objective function. This solution gives the primal variables as functions of the Lagrange multipliers, which are called dual variables, so that the new problem is to maximize the objective function with respect to the dual variables under the derived constraints on the dual variables (including at least the nonnegativity constraints)."

theorems of alternative:

"Farkas' lemma belongs to a class of statements called "theorems of the alternative": a theorem stating that exactly one of two systems has a solution.

Farkas' lemma - Wikipedia

https://en.wikipedia.org › wiki › Farkas'_lemma"

"In layman's terms, a Theorem of the Alternative is a theorem which states that given two conditions, one of the two conditions is true. It further states that if one of those conditions fails to be true, then the other condition must be true.May 8, 1991"

http://digitalcommons.iwu.edu/cgi/viewcontent.cgi?article=1000&context=math_honproj

Applications of theorems of the alternative: https://link.springer.com/article/10.1007/BF00939083
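One common form of Farkas' lemma states that exactly one of these systems has a solution: (1) Ax = b with x ≥ 0, or (2) Aᵀy ≥ 0 with bᵀy < 0. A solution of (2) is therefore a certificate that (1) is infeasible. The easy half of the proof is one line: if both held, bᵀy = (Ax)ᵀy = xᵀ(Aᵀy) ≥ 0, a contradiction. The sketch below only verifies candidate solutions (it does not search for them); the matrix, vectors, and helper names are made-up examples.

```python
def mat_vec(A, v):
    """Matrix-vector product for a list-of-rows matrix."""
    return [sum(a * vi for a, vi in zip(row, v)) for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def solves_system_1(A, b, x, tol=1e-9):
    """Check x >= 0 and A x = b."""
    return all(xi >= -tol for xi in x) and all(
        abs(lhs - bi) <= tol for lhs, bi in zip(mat_vec(A, x), b)
    )


def solves_system_2(A, b, y, tol=1e-9):
    """Check A^T y >= 0 and b^T y < 0: a certificate that system 1 is infeasible."""
    at_y = mat_vec(transpose(A), y)
    b_y = sum(bi * yi for bi, yi in zip(b, y))
    return all(v >= -tol for v in at_y) and b_y < -tol


A = [[1.0, 1.0], [1.0, -1.0]]
# b = (1, 3): the only solution of A x = b is x = (2, -1), which is not >= 0,
# and y = (1, -1) certifies this: A^T y = (0, 2) >= 0 and b^T y = -2 < 0.
print(solves_system_1(A, [1.0, 3.0], [2.0, -1.0]))  # False
print(solves_system_2(A, [1.0, 3.0], [1.0, -1.0]))  # True
# b = (3, 1): x = (2, 1) >= 0 solves system 1.
print(solves_system_1(A, [3.0, 1.0], [2.0, 1.0]))   # True
```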

interior-point methods:

"Interior-point methods (also referred to as barrier methods or IPMs) are a certain class of algorithms that solve linear and nonlinear convex optimization problems.""

https://en.wikipedia.org/wiki/Interior-point_method
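The barrier idea can be shown on a one-variable LP: minimize x subject to 1 ≤ x ≤ 3. The constraints are replaced by the logarithmic barrier −log(x − 1) − log(3 − x), the objective is weighted by a parameter t, and Newton's method with backtracking minimizes the result for increasing t. This is a minimal sketch of the basic barrier (path-following) method under those assumptions, not the primal-dual algorithms used in production solvers; the problem, parameters, and function name are illustrative.

```python
import math


def barrier_lp_1d(t0=1.0, mu=10.0, eps=1e-8):
    """Minimize f(x) = x subject to 1 <= x <= 3 with a log-barrier method.

    For each t, damped Newton minimizes the centering objective
        phi_t(x) = t*(x - 1) - log(x - 1) - log(3 - x)
    (t*x shifted by the constant t, which does not move the minimizer).
    Its minimizer x*(t) lies on the central path and is within m/t of the
    optimum (m = 2 constraints), so t grows until m/t < eps.
    """
    def phi(x, t):
        return t * (x - 1) - math.log(x - 1) - math.log(3 - x)

    x, t, m = 2.0, t0, 2  # start strictly inside the feasible interval
    while m / t >= eps:
        for _ in range(100):  # centering: damped Newton on phi_t
            grad = t - 1 / (x - 1) + 1 / (3 - x)
            hess = 1 / (x - 1) ** 2 + 1 / (3 - x) ** 2
            step = -grad / hess
            if grad * grad / hess / 2 < 1e-12:  # half the squared Newton decrement
                break
            s = 1.0
            # Backtrack until the trial point is inside (1, 3) and phi decreases enough
            while (not (1 < x + s * step < 3)
                   or phi(x + s * step, t) > phi(x, t) + 0.25 * s * grad * step):
                s *= 0.5
            x += s * step
        t *= mu
    return x


x_opt = barrier_lp_1d()  # approaches the optimum x = 1 from inside the interval
```

Every iterate stays strictly inside the feasible interval, which is what gives interior-point methods their name.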

Applications of signal processing:

"Applications of DSP include audio signal processing, audio compression, digital image processing, video compression, speech processing, speech recognition, digital communications, digital synthesizers, radar, sonar, financial signal processing, seismology and biomedicine.

Digital signal processing - Wikipedia

https://en.wikipedia.org › wiki › Digital_signal_processing"

Applications of optimization to signal processing:

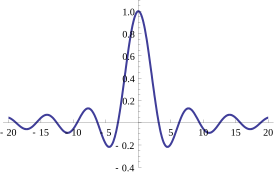

"Convex optimization has been used in signal processing for a long time, to choose coefficients for use in fast (linear) algorithms, such as in filter or array design; more recently, it has been used to carry out (nonlinear) processing on the signal itself."

https://web.stanford.edu/~boyd/papers/rt_cvx_sig_proc.html
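As a small example of optimization applied to the signal itself, consider quadratic smoothing: given noisy samples y, choose x to minimize Σ(xᵢ − yᵢ)² + λΣ(xᵢ₊₁ − xᵢ)². This is an unconstrained convex problem whose optimality condition is the tridiagonal linear system (I + λDᵀD)x = y, with D the first-difference matrix, and the sketch solves it with the Thomas algorithm. It is a linear special case chosen because it has a closed form; nonlinear methods such as total-variation denoising need an iterative solver. The function name, λ value, and signal are illustrative.

```python
def smooth(y, lam):
    """Quadratic smoothing of the samples y.

    Minimizes sum((x[i] - y[i])**2) + lam * sum((x[i+1] - x[i])**2).
    The gradient vanishes where (I + lam * D^T D) x = y, with D the
    first-difference matrix; that system is tridiagonal and is solved
    here with the Thomas algorithm in O(n) time.
    """
    n = len(y)
    if n < 2:
        return list(y)
    # Diagonal of I + lam * D^T D: 1 + lam at the ends, 1 + 2*lam inside
    diag = [1 + lam * (1 if i in (0, n - 1) else 2) for i in range(n)]
    off = -lam  # every sub- and super-diagonal entry
    c = [0.0] * n  # modified super-diagonal
    d = [0.0] * n  # modified right-hand side
    c[0] = off / diag[0]
    d[0] = y[0] / diag[0]
    for i in range(1, n):  # forward elimination
        denom = diag[i] - off * c[i - 1]
        c[i] = off / denom if i < n - 1 else 0.0
        d[i] = (y[i] - off * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):  # back substitution
        x[i] = d[i] - c[i] * x[i + 1]
    return x


noisy = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0, 1.0]  # made-up alternating signal
smoothed = smooth(noisy, lam=5.0)
```

Larger λ trades fidelity to y for smoothness, and λ = 0 returns y unchanged. Because every row of D sums to zero, the smoothed signal keeps the same sum as the input.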

Kinect Audio Signal Optimization:

https://www.microsoft.com/en-us/research/wp-content/uploads/2016/02/ivantash-optimization_methods_and_their_applications_in_dsp.pdf

statistics and machine learning:

"The Actual Difference Between Statistics and Machine Learning

https://towardsdatascience.com › the-actual-difference-between-statistics-an...

Mar 24, 2019 - “The major difference between machine learning and statistics is their purpose. Machine learning models are designed to make the most accurate predictions possible. Statistical models are designed for inference about the relationships between variables.” ... Statistics is the mathematical study of data."

Machine Learning vs Statistics - KDnuggets

"Machine learning is all about predictions, supervised learning, and unsupervised learning, while statistics is about sample, population, and hypotheses. But are ..."

https://www.kdnuggets.com/2016/11/machine-learning-vs-statistics.html

The Close Relationship Between Applied Statistics and Machine Learning

https://machinelearningmastery.com/relationship-between-applied-statistics-and-machine-learning/

Control and mechanical engineering:

"Control engineering is the engineering discipline that focuses on the modeling of a diverse range of dynamic systems (e.g. mechanical systems) and the design of controllers that will cause these systems to behave in the desired manner. ... In most cases, control engineers utilize feedback when designing control systems." https://en.wikipedia.org/wiki/Control_engineering

Digital and analog circuit design:

"With the advent of logic synthesis, one of the biggest challenges faced by the electronic design automation (EDA) industry was to find the best netlist representation of the given design description. While two-level logic optimization had long existed in the form of the Quine–McCluskey algorithm, later followed by the Espresso heuristic logic minimizer, the rapidly improving chip densities, and the wide adoption of HDLs for circuit description, formalized the logic optimization domain as it exists today." https://en.wikipedia.org/wiki/Logic_optimization

The analysis and optimization algorithms of the electronic circuits design

https://www.researchgate.net/publication/269211254_The_analysis_and_optimization_algorithms_of_the_electronic_circuits_design

Optimization Methods in Finance

http://web.math.ku.dk/~rolf/CT_FinOpt.pdf

Optimization Models and Methods with Applications in Finance: http://www.bcamath.org/documentos_public/courses/Nogales_2012-13_02_18-22.pdf

Optimization for financial engineering: a special issue: https://link.springer.com/article/10.1007/s11081-017-9358-1

----

Sayed Ahmed

BSc. Eng. in Comp. Sc. & Eng. (BUET)

MSc. in Comp. Sc. (U of Manitoba, Canada)

MSc. in Data Science and Analytics (Ryerson University, Canada)

Linkedin: https://ca.linkedin.com/in/sayedjustetc

Blog: http://Bangla.SaLearningSchool.com, http://SitesTree.com

Online and Offline Training: http://Training.SitesTree.com

Get access to courses on Big Data, Data Science, AI, Cloud, Linux, System Administration, Web Development, and related topics. You can also create your own courses and sell them to earn revenue.

http://sitestree.com/training/

If you want to contribute to the operation of this site (Bangla.SaLearn) including occasional free and/or low cost online training (using Zoom.us): http://Training.SitesTree.com (or charitable/non-profit work in the education/health/social service sector), you can financially contribute to: safoundation at salearningschool.com using Paypal or Credit Card (on http://sitestree.com/training/enrol/index.php?id=114 ).

